Deploying EVE-NG On Google Cloud Platform: Part 3

Enabling connectivity between EVE-NG topology nodes and Google Cloud Platform (GCP) VMs.

In Part 1 of this blog series, we covered the step-by-step procedure for installing EVE-NG (eve-ng.net) on Google Cloud Platform (GCP). In Part 2, we looked at how to spin up a simple topology in EVE-NG, consisting of Arista EOS and Juniper vMX routers.

However, you will likely want to connect your EVE-NG topology to external VMs that reside in your GCP environment. For example, you might want to send Syslog messages to an existing Splunk server in your GCP lab, or stream telemetry from a Juniper vMX to an existing Telegraf collector running on a GCP VM. This type of access is not enabled by default and requires a small workaround in both EVE-NG and GCP to make it work.

Enabling this access can be quite powerful, in that the topologies you spin up in EVE-NG can then serve as an extension of the existing infrastructure you might have deployed in GCP. This is very useful in testing and prototyping scenarios. So, with that in mind, in this third blog post of this series, I will walk through a simple approach that worked for me in enabling this bridging between the EVE-NG and GCP network domains.

Bridge Interfaces Created By EVE-NG

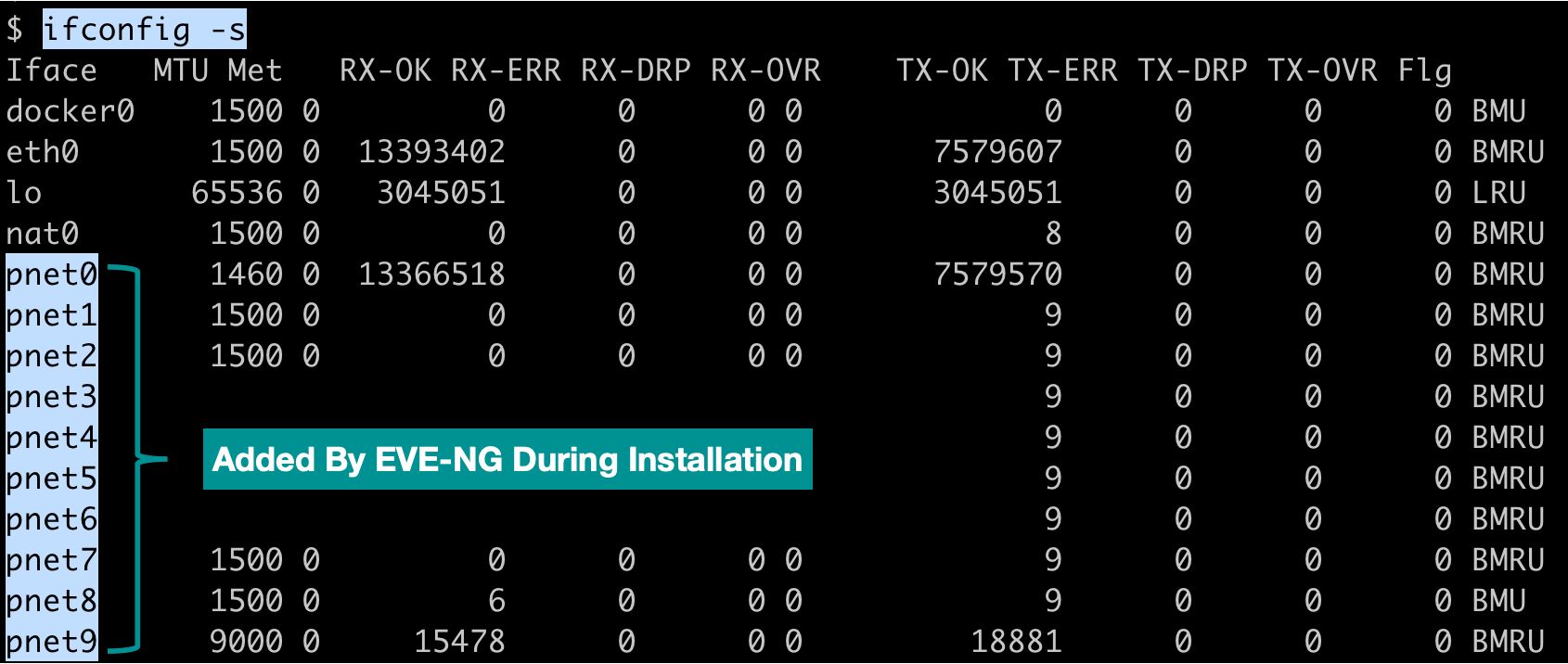

EVE-NG creates several bridge interfaces on the host VM during the installation process. Of particular importance are the interfaces named pnet0 through pnet9. If you log in to your EVE-NG host machine and issue the ifconfig -s command, you will see these interfaces, as shown in the screenshot below.

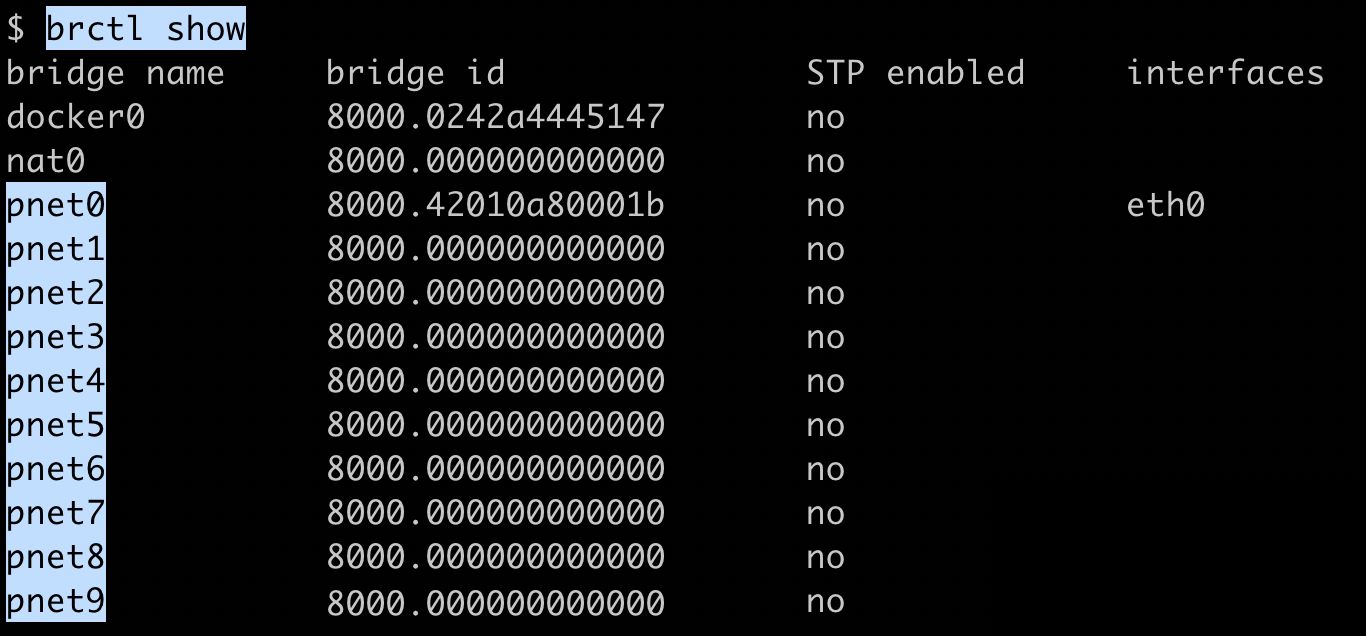

By issuing the brctl show command, we can see that these are indeed bridge interfaces (see screenshot below). A bridge interface shows up in ifconfig or ip link output alongside physical interfaces such as eth0; however, it is a virtual interface that takes frames arriving on one attached interface and transparently forwards them to the other interface(s) on the same bridge.

In the context of KVM/QEMU, a Linux bridge is used to connect the KVM/QEMU guest interface to the host network interface.
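The same bridge information can also be read directly from sysfs. The following is a minimal Python sketch, assuming a Linux host where each bridge exposes a bridge directory and lists its attached ports under brif in /sys/class/net. The helper name list_bridges is my own and is not part of EVE-NG.

```python
from pathlib import Path


def list_bridges(sysfs_net="/sys/class/net"):
    """Return {bridge_name: [member interfaces]} by reading sysfs.

    Assumption: a Linux bridge has a `bridge` directory under its
    sysfs entry, and its attached ports appear as entries in `brif`.
    """
    bridges = {}
    root = Path(sysfs_net)
    if not root.is_dir():
        return bridges  # not a Linux host, or sysfs is not mounted
    for iface in sorted(root.iterdir()):
        if (iface / "bridge").is_dir():
            brif = iface / "brif"
            members = sorted(p.name for p in brif.iterdir()) if brif.is_dir() else []
            bridges[iface.name] = members
    return bridges


# Print every bridge found on this host with its member interfaces.
for name, members in list_bridges().items():
    print(f"{name}: {', '.join(members) or '(no members)'}")
```

On an EVE-NG host, I would expect this to list pnet0 with eth0 as a member and the remaining pnet bridges without a physical member, matching what brctl show reports.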

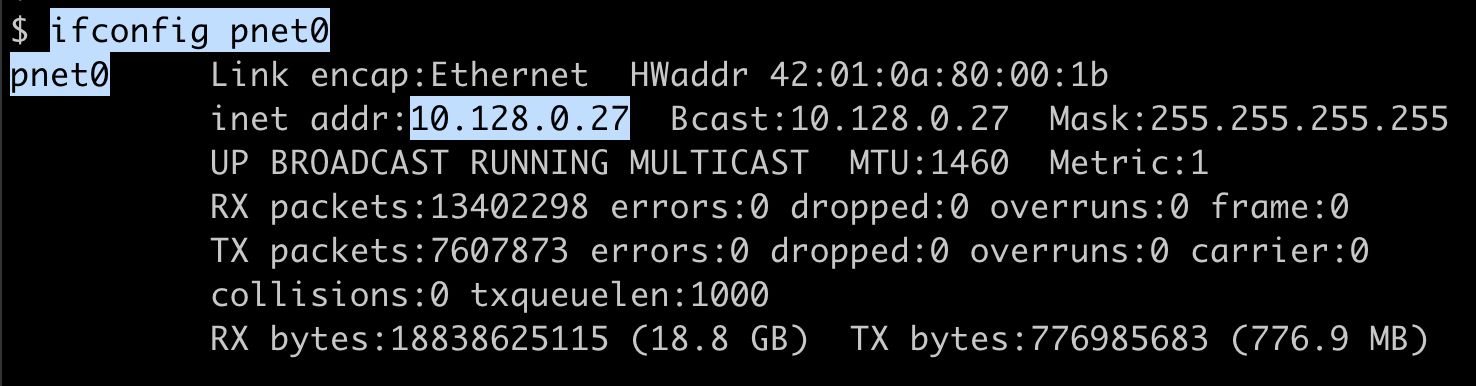

As shown in the screenshot above, bridge interface pnet0 is bridged with eth0, the primary physical Ethernet port of the EVE-NG host. Furthermore, as depicted below, pnet0 is assigned the IP address (e.g. "10.128.0.27") that is used for the EVE-NG Web GUI. This EVE-NG subnet can optionally be used as a management network in labs, but for my purposes, I wanted to keep the management IPs for EVE-NG nodes in a separate address space from my external GCP lab VMs. So, we are going to use a bridge interface other than pnet0 for the management network.

Mapping Bridge Interfaces To Cloud Interfaces In EVE-NG

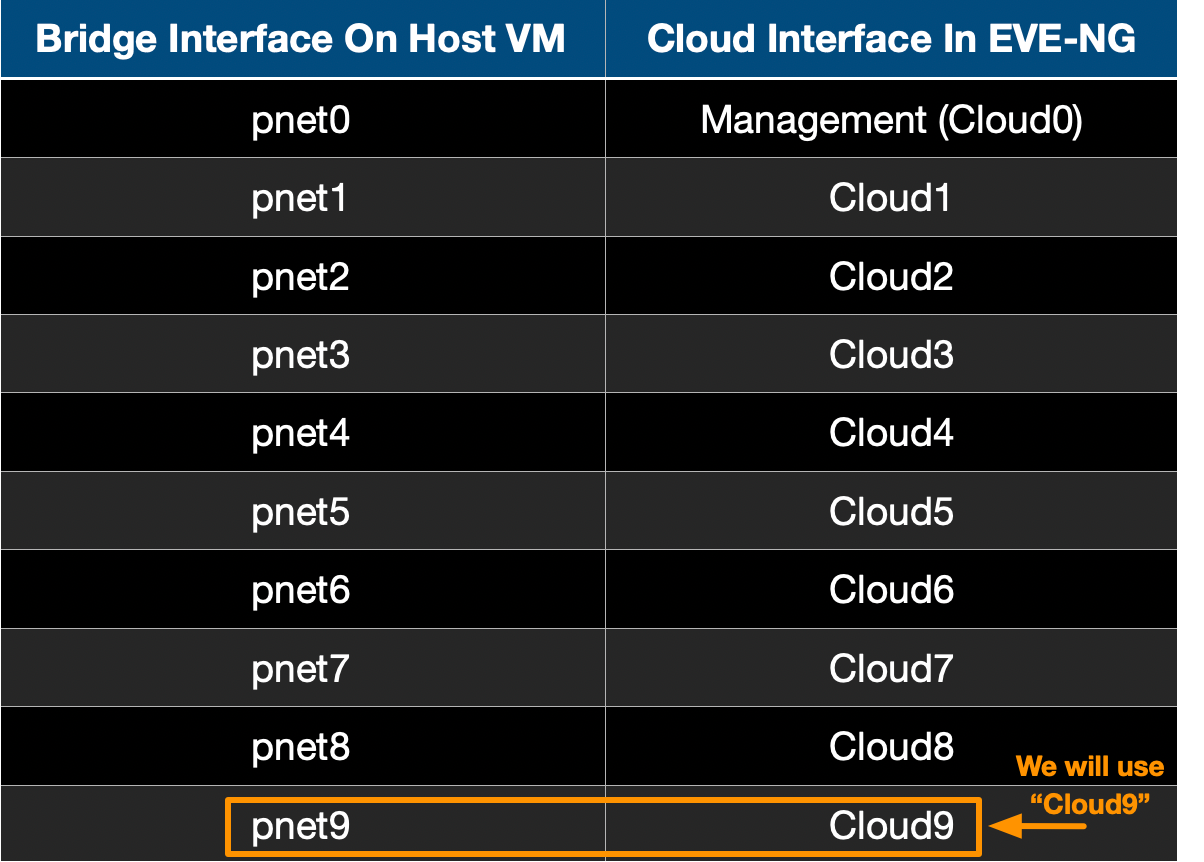

Inside of EVE-NG, there is a notion of "Cloud Interfaces", which have a one-to-one mapping to the bridge interfaces (pnet0 to pnet9) mentioned above. A summary of these mappings is shown in the table below.

In a nutshell, for EVE-NG, the word "Cloud" in the above table is used as an alias for "pnet". To create a bridge between EVE-NG and GCP, we need to perform the following steps:

- Select one of the "Cloud" interfaces from the above table to use inside the EVE-NG Topology Designer. As highlighted in the table above, we are going to work with bridge interface pnet9 (i.e. Cloud interface Cloud9).

- For the devices inside the EVE-NG topology that require GCP access, connect them to the selected "Cloud" interface (i.e. Cloud9).

- For the associated bridge interface on the host VM that corresponds to the Cloud interface (e.g. pnet9 for Cloud9), assign a static IP address (e.g. "192.168.249.1") from a designated management subnet (e.g. "192.168.249.0/24"). Be sure that this management subnet does not conflict with an existing subnet in your GCP lab infrastructure. This will serve as the "Gateway IP" for all the EVE-NG nodes connected to this management subnet.

- For each EVE-NG node requiring GCP lab access, configure a static IP address from the management subnet address space. Be sure to add a static route to the GCP lab subnet, using the "Gateway IP" from the previous step as the next-hop address.

- In GCP, add a static route to this management subnet that points to your EVE-NG VM as the next hop.
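Before applying these steps, it can help to sanity-check the addressing plan. The sketch below uses Python's standard ipaddress module to map a Cloud interface to its pnet bridge, check the management subnet for overlaps, and derive the gateway address. The function names are hypothetical, and the GCP subnet "10.128.0.0/20" is only an assumed example; substitute the subnets that actually exist in your project.

```python
import ipaddress
import re


def cloud_to_bridge(cloud_name):
    """Map an EVE-NG Cloud interface (Cloud0..Cloud9) to its pnet bridge."""
    match = re.fullmatch(r"Cloud([0-9])", cloud_name)
    if match is None:
        raise ValueError(f"expected Cloud0..Cloud9, got {cloud_name!r}")
    return f"pnet{match.group(1)}"


def conflicting_subnets(mgmt_subnet, gcp_subnets):
    """Return the GCP subnets that overlap the proposed management subnet."""
    mgmt = ipaddress.ip_network(mgmt_subnet)
    return [s for s in gcp_subnets if mgmt.overlaps(ipaddress.ip_network(s))]


def gateway_ip(mgmt_subnet):
    """Return the first usable host address, used here as the gateway IP."""
    return str(next(iter(ipaddress.ip_network(mgmt_subnet).hosts())))


# Assumed example values; replace with the subnets in your own GCP project.
gcp_subnets = ["10.128.0.0/20"]
mgmt_subnet = "192.168.249.0/24"

print(cloud_to_bridge("Cloud9"))
print(conflicting_subnets(mgmt_subnet, gcp_subnets))
print(gateway_ip(mgmt_subnet))
```

An empty list from conflicting_subnets means the management subnet does not overlap any of the subnets you listed.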

Let's walk through the above five steps with a concrete example.

Creating The EVE-NG To GCP Bridge

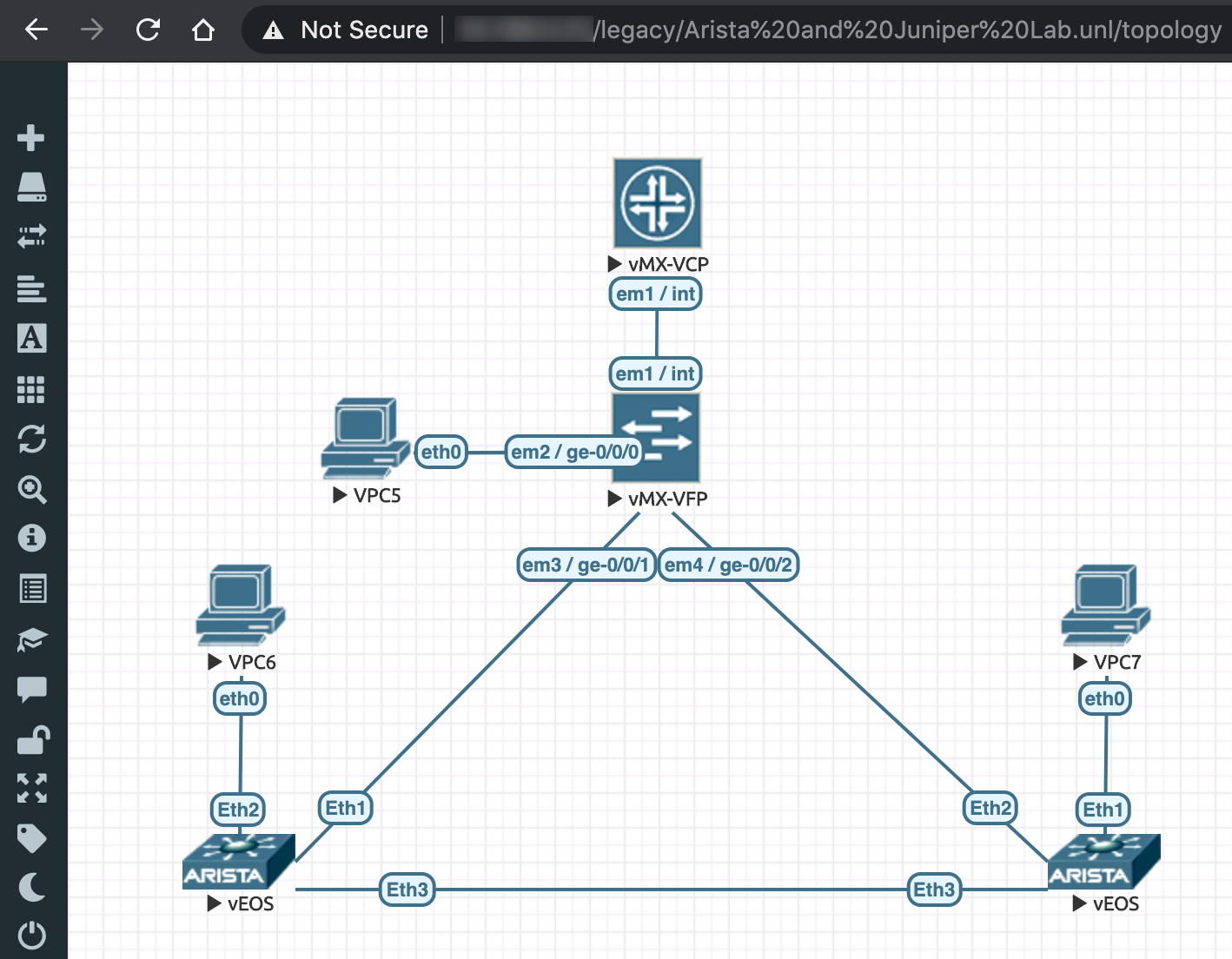

The EVE-NG topology that we were working on in Part 2 of this blog series is shown in the figure below.

What we want to do here is connect the two Arista vEOS routers and the Juniper vMX router to a management subnet ("192.168.249.0/24") which has access to the external GCP VMs in the same GCP project where our EVE-NG VM resides.

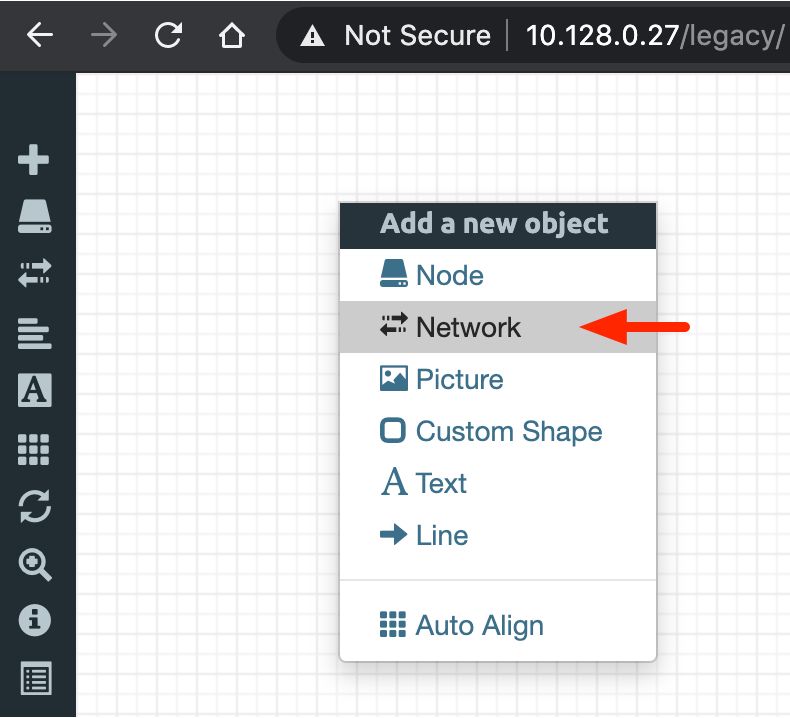

First, let's add Cloud9 to our network topology. To do this, right-click anywhere in the Topology Designer canvas and select "Network" from the "Add a new object" context-menu. This is shown in the screenshot below.

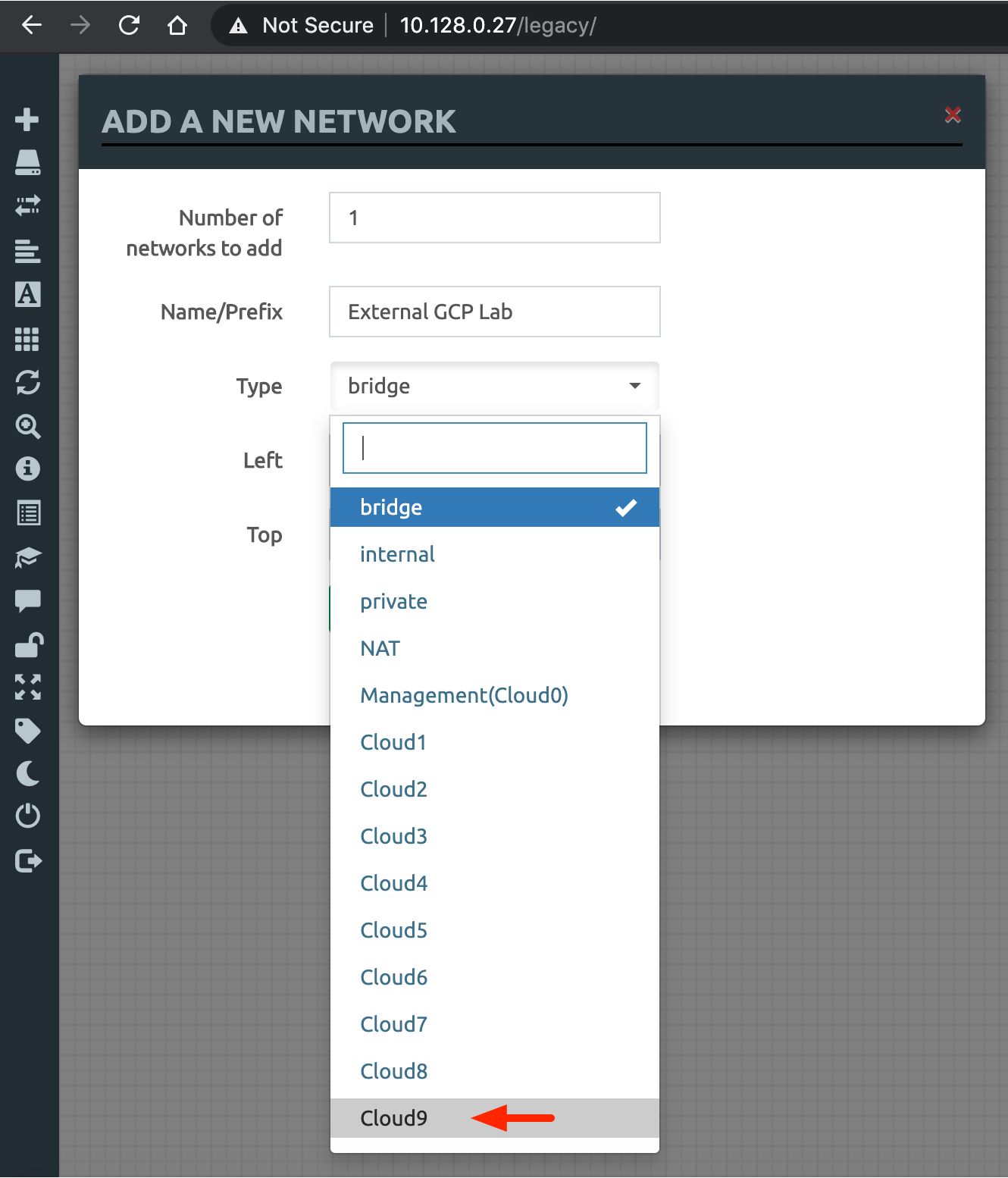

In the "Add A New Network" popup window that appears (screenshot below), select Cloud9 from the dropdown menu of the "Type" field. Optionally, give a descriptive name for the network in the "Name/Prefix" field (e.g. "External GCP Lab"). Click "Save" to continue.

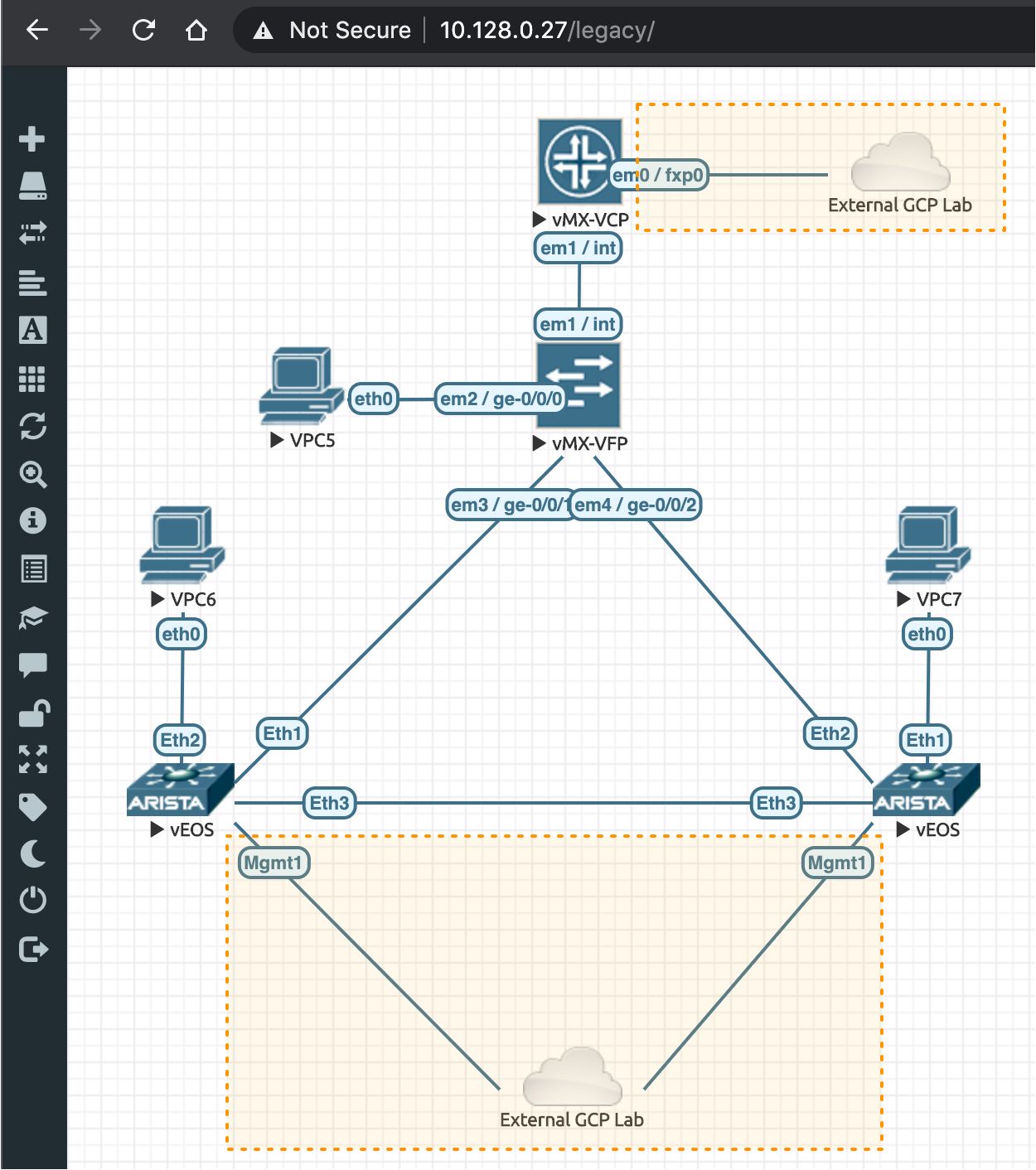

This results in an "External GCP Lab" cloud icon being dropped into our topology canvas. Next, we connect our three devices to this icon. Note that for layout/neatness reasons, we created a second "External GCP Lab" icon to facilitate the connections. For the Arista vEOS devices, be sure to select Mgmt1 as the interface. For the Juniper vMX device, be sure to make the connection from the VCP and to use em0/fxp0 as the interface. This is shown, highlighted in yellow, in the screenshot below.

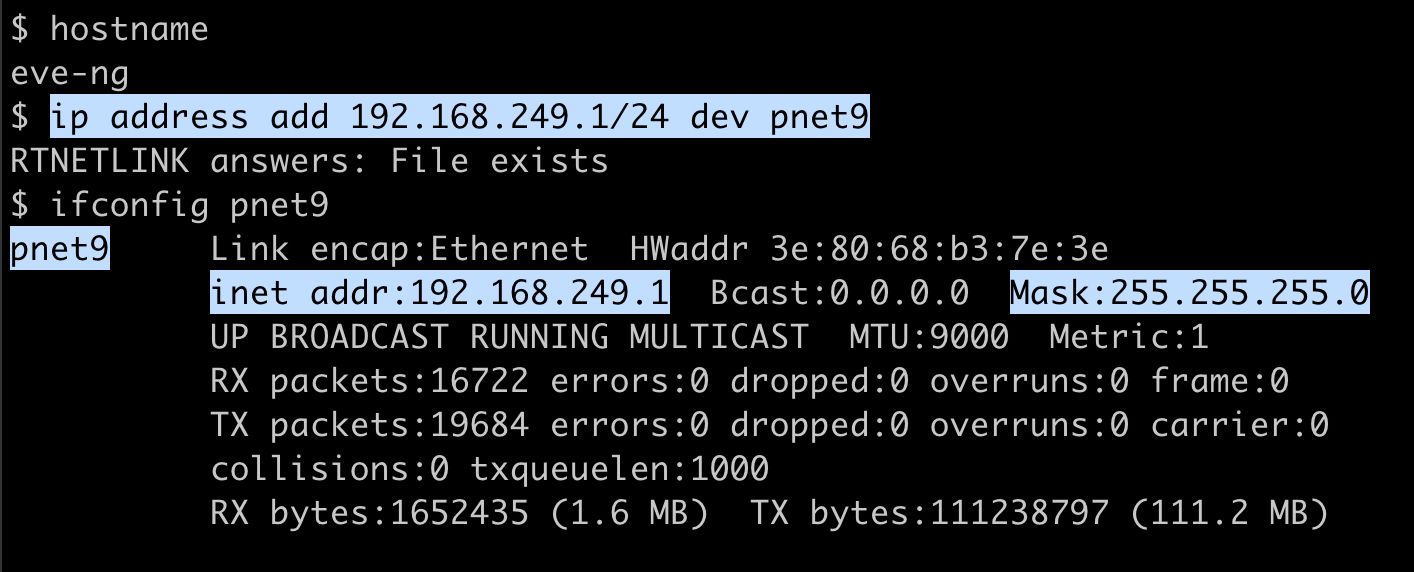

Recall that we used Cloud interface Cloud9 above for our topology's connection to external GCP VMs. Cloud9 maps to the bridge interface pnet9 on our EVE-NG VM. Let's now assign a static IP address (e.g. "192.168.249.1") from our designated management subnet (e.g. "192.168.249.0/24") to the pnet9 interface. This IP address will be our "Gateway IP" for our EVE-NG nodes. To do this, log on to the EVE-NG VM Linux shell and issue the ip address add 192.168.249.1/24 dev pnet9 command, as shown in the screenshot below. Verify that the IP address has been successfully configured using the ifconfig pnet9 command.
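If you prefer to script this step, the sketch below builds the same ip address add command along with a verification command. Note that it verifies with ip address show rather than the ifconfig command used above, and the helper name bridge_ip_commands is my own. It only prints the commands; running them on the EVE-NG host (as root) is left to you.

```python
import ipaddress
import shlex


def bridge_ip_commands(bridge, gateway_cidr):
    """Build the commands that assign and then display the bridge address."""
    # ip_interface() raises ValueError if the address/prefix is malformed.
    iface = ipaddress.ip_interface(gateway_cidr)
    return [
        ["ip", "address", "add", str(iface), "dev", bridge],
        ["ip", "address", "show", "dev", bridge],
    ]


# Values taken from the example in this post.
for cmd in bridge_ip_commands("pnet9", "192.168.249.1/24"):
    print(shlex.join(cmd))
```

Keep in mind that an address added with ip address add is not written to any configuration file, so it would typically need to be re-applied after a reboot of the EVE-NG VM.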

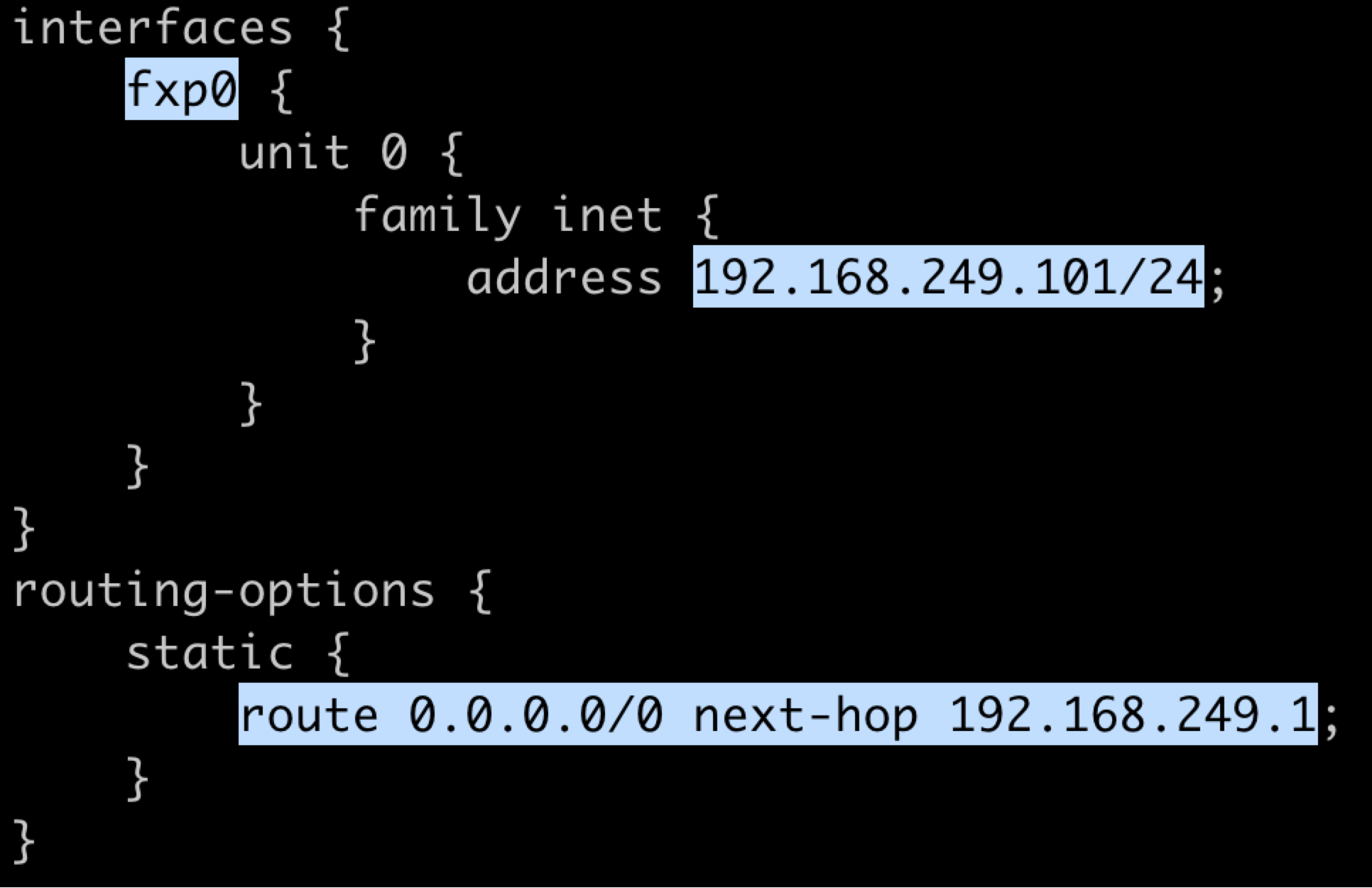

Next, let's configure a management IP address for each device that needs to access external GCP lab VMs. The screenshot below shows the simple config needed for the Juniper vMX. Highlighted are the management interface name (fxp0), the device's management IP address ("192.168.249.101/24"), and the default static route to the gateway IP ("192.168.249.1").

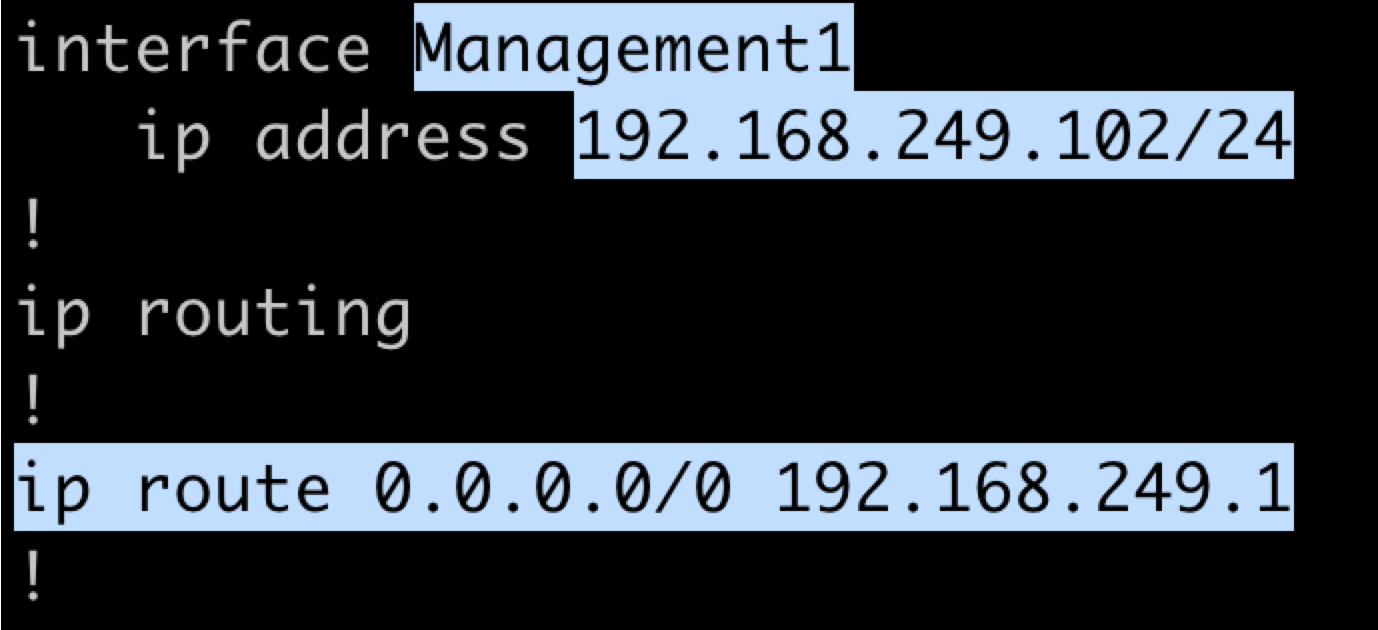

The screenshot below shows the config needed for the Arista vEOS. Highlighted are the management interface name (Management1), the device's management IP address ("192.168.249.102"), and the default static route to the gateway IP ("192.168.249.1").
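For reference, the sketch below renders the kind of configuration lines described for both devices. This is my own reconstruction using standard Junos and EOS syntax, so it may differ in detail from the screenshots. In particular, the /24 prefix length on the Arista address is an assumption (only the host address is given above), and the function names are hypothetical.

```python
def junos_mgmt_config(address_cidr, gateway, interface="fxp0"):
    """Junos set-style commands: management address plus a default route."""
    return [
        f"set interfaces {interface} unit 0 family inet address {address_cidr}",
        f"set routing-options static route 0.0.0.0/0 next-hop {gateway}",
    ]


def eos_mgmt_config(address_cidr, gateway, interface="Management1"):
    """Arista EOS config lines: management address plus a default route."""
    return [
        f"interface {interface}",
        f"   ip address {address_cidr}",
        f"ip route 0.0.0.0/0 {gateway}",
    ]


# Addresses from the example in this post; the Arista /24 is assumed.
print("\n".join(junos_mgmt_config("192.168.249.101/24", "192.168.249.1")))
print()
print("\n".join(eos_mgmt_config("192.168.249.102/24", "192.168.249.1")))
```

A default route is used here to match the description above. If you would rather follow the narrower approach from the step list, replace 0.0.0.0/0 with your GCP lab subnet.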

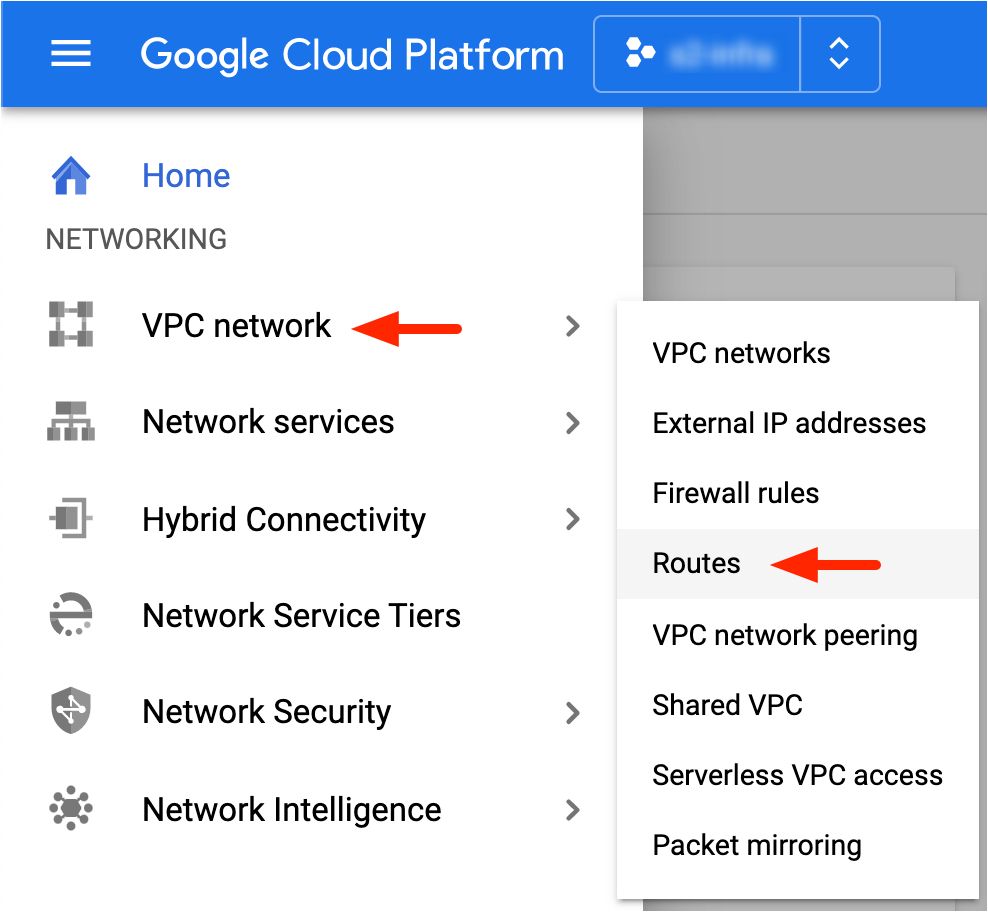

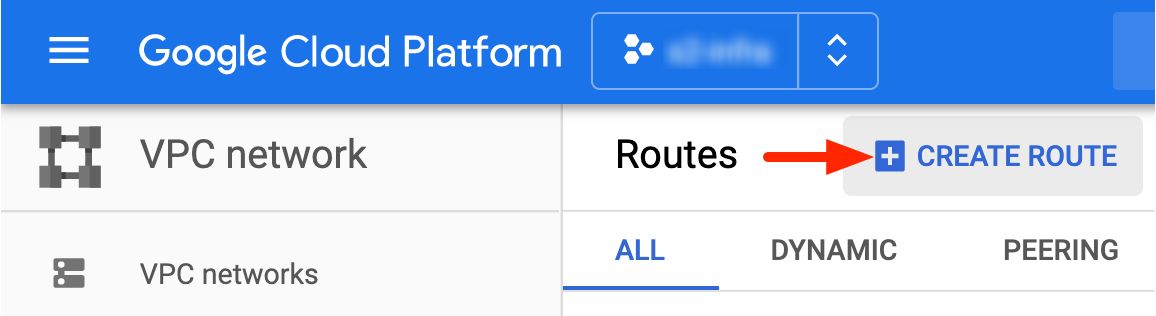

Our final step is to ensure that all of our GCP lab VMs know how to route to the 192.168.249.0/24 management subnet for our EVE-NG topology. To do this, we need to create a static route in our GCP project. From the GCP Console sidebar menu, select "VPC network -> Routes", as shown below.

Then, from the "VPC network" landing page, click on the "Create Route" button, as depicted in the screenshot below.

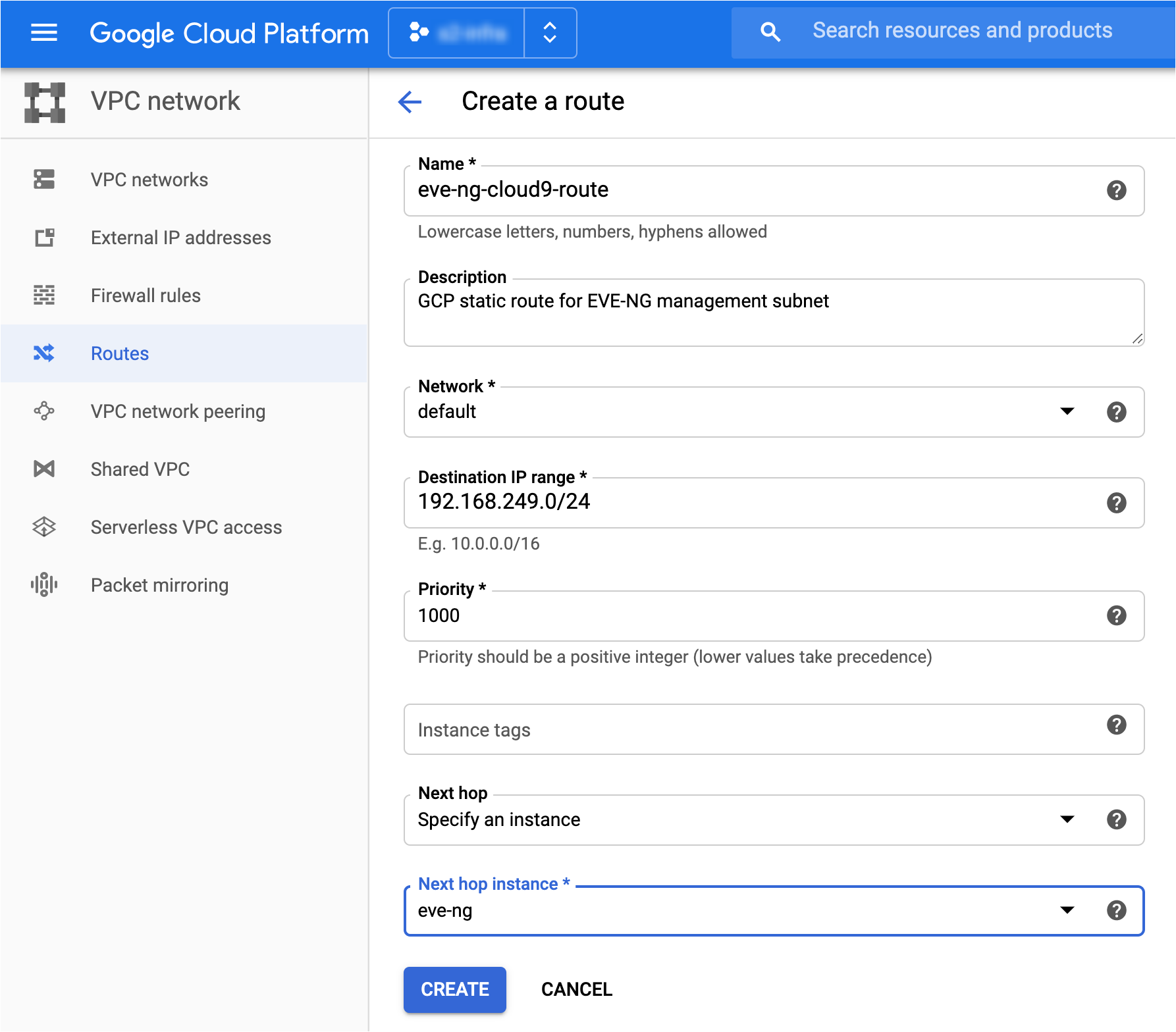

The "Create a route" landing page appears as shown in the screenshot below. Enter the following configuration details:

- Name: Provide a descriptive name for the route (e.g. "eve-ng-cloud9-route").

- Description: Provide an optional description for the route.

- Network: Specify the GCP Network to which this route applies (e.g. "default").

- Destination IP range: This is where we specify the management subnet used by our EVE-NG topology (e.g. "192.168.249.0/24").

- Priority: Unless you need to change it, leave this at the default value of "1000".

- Next hop: From the dropdown list, we have an option to specify either an IP address or a GCP VM instance as a next hop for the destination IP range. If your EVE-NG instance uses a DHCP-assigned IP address, then it's best to choose "Specify an instance" as the dropdown value. This way, the next hop will always point to EVE-NG even if another IP address gets assigned to EVE-NG via DHCP.

- Next hop instance: Here, specify your EVE-NG GCP VM instance as the next hop.

- Click on the "Create" button to continue.
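The same route can also be created from the command line with gcloud compute routes create. The sketch below only assembles and prints the command so you can review it before running it. The instance name "eve-ng" and zone "us-central1-a" are placeholders I made up; use the name and zone of your own EVE-NG VM.

```python
import shlex


def create_route_command(name, network, dest_range, instance, zone, priority=1000):
    """Build a gcloud command that routes dest_range via a VM instance."""
    return [
        "gcloud", "compute", "routes", "create", name,
        f"--network={network}",
        f"--destination-range={dest_range}",
        f"--next-hop-instance={instance}",
        f"--next-hop-instance-zone={zone}",
        f"--priority={priority}",
    ]


# "eve-ng" and "us-central1-a" are hypothetical; the rest mirrors the form above.
cmd = create_route_command(
    name="eve-ng-cloud9-route",
    network="default",
    dest_range="192.168.249.0/24",
    instance="eve-ng",
    zone="us-central1-a",
)
print(shlex.join(cmd))
```

Using --next-hop-instance mirrors the "Specify an instance" choice in the console, so the route keeps pointing at the EVE-NG VM even if its internal IP address changes.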

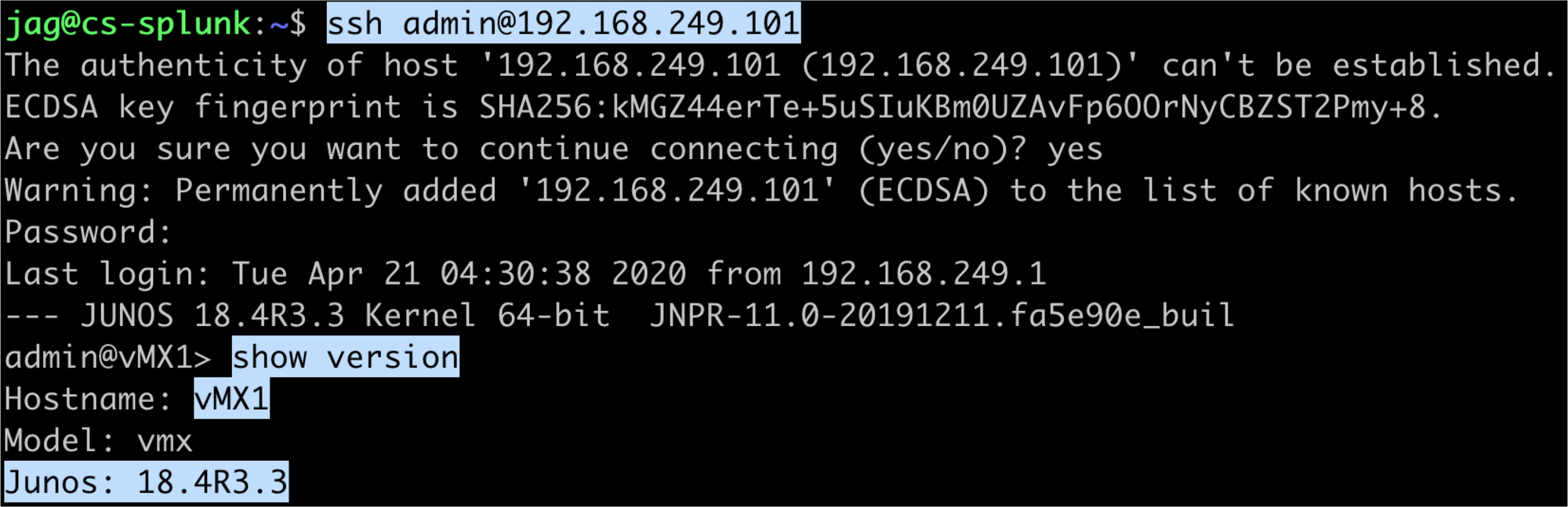

To test it out, log on to one of your external GCP VM instances and try to connect to one of your EVE-NG topology routers. For example, in my setup, I want to be able to send Syslog messages from my EVE-NG routers to an external Splunk instance running in my GCP lab. So, as shown in the screenshot below, I logged on to the Splunk VM and was able to SSH to the Juniper vMX router (192.168.249.101).
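If you want a quick scripted check instead of an interactive SSH session, a plain TCP connection attempt to port 22 is enough to confirm that the route works in both directions. This is a minimal sketch using Python's standard socket module; the function name tcp_port_open is my own, and it assumes SSH is enabled on the router and listening on the default port.

```python
import socket


def tcp_port_open(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable networks, and timeouts.
        return False
```

From a GCP lab VM, calling tcp_port_open("192.168.249.101") should return True once the GCP route and the router's management configuration are both in place. A False result does not say which side is at fault, so check the GCP route and the router's static route in turn.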

And that's all there is to it! Your EVE-NG topology can now serve as an extension to your existing GCP infrastructure for testing and/or prototyping purposes. In the next blog post (coming soon!), we will explore how we can connect our EVE-NG topology nodes to the Internet.